BoostDFO

October 2018 - September 2022

Team

Doctoral Members: Ana Luísa Custódio (PI), Pedro Medeiros (co-PI), Maria do Carmo Brás, Rohollah Garmanjani, and Vítor Duarte

Students: Aboozar Mohammadi, Everton Silva, Sérgio Tavares, Tiago Cordeiro, Nelson Santos, and Bruno Baptista

Consultants: Milagros Loreto (University of Washington Bothell) and Luís Nunes Vicente (Lehigh University)

Inspired by successful approaches used in single objective local Derivative-free Optimization, and resorting to parallel/cloud computing, new numerical algorithms will be proposed and analyzed. As a result, an integrated toolbox for solving multi/single objective, global/local Derivative-free Optimization problems will be available. The toolbox will take advantage of parallelization and cloud computing, provide easy access to several efficient and robust algorithms, and make it possible to tackle harder Derivative-free Optimization problems.

Related publications

Papers

- A. Mohammadi and A. L. Custódio, A trust region approach for computing Pareto fronts in Multiobjective Optimization, Computational Optimization and Applications, 87 (2024) 149 - 179 PDF

- A. L. Custódio, E. H. M. Krulikovski, and M. Raydan, A hybrid direct search and projected simplex gradient method for convex constrained minimization, Optimization Methods and Software, 39 (2024) 534 - 568 PDF

- R. Garmanjani, E. H. M. Krulikovski, and A. Ramos, On stationarity conditions and constraint qualifications for multiobjective optimization problems with cardinality constraints, Applied Mathematics & Optimization, 91 (2025), article 22 PDF

- A. L. Custódio, R. Garmanjani, and M. Raydan, Derivative-free separable quadratic modeling and cubic regularization for unconstrained optimization, 4OR, 22 (2024) 121 - 144 PDF

- R. Garmanjani, Complexity bound of trust-region methods for convex smooth unconstrained multiobjective optimization, Optimization Letters, 17 (2023) 1161 - 1179 PDF

- E. J. Silva, E. W. Karas, and L. B. Santos, Integral global optimality conditions and an algorithm for multiobjective problems, Numerical Functional Analysis and Optimization, 43 (2022) 1265 - 1288 PDF

- S. Tavares, C. P. Brás, A. L. Custódio, V. Duarte, and P. Medeiros, Parallel strategies for Direct Multisearch, Numerical Algorithms, 92 (2023) 1757 - 1788 PDF

- R. Andreani, A. L. Custódio, and M. Raydan, Using first-order information in Direct Multisearch for multiobjective optimization, Optimization Methods and Software, 37 (2022) 2135 - 2156 PDF

- A. L. Custódio, Y. Diouane, R. Garmanjani, and E. Riccietti, Worst-case complexity bounds of directional direct-search methods for multiobjective derivative-free optimization, Journal of Optimization Theory and Applications, 188 (2021) 73 - 93 PDF

- C. P. Brás and A. L. Custódio, On the use of polynomial models in multiobjective directional direct search, Computational Optimization and Applications, 77 (2020) 897 - 918 PDF

- R. Garmanjani, A note on the worst-case complexity of nonlinear stepsize control methods for convex smooth unconstrained optimization, Optimization, 71 (2022) 1709 - 1719 PDF

- R. G. Begiato, A. L. Custódio, and M. A. Gomes-Ruggiero, A global hybrid derivative-free method for high-dimensional systems of nonlinear equations, Computational Optimization and Applications, 75 (2020) 93 - 112 PDF

Theses

- S. Tavares, Contributions to the development of an integrated toolbox of solvers in Derivative-free Optimization, NOVA School of Science and Technology, July 2020 PDF

- T. Cordeiro, A global optimization algorithm using trust-region methods and clever multistart, NOVA School of Science and Technology, November 2020 PDF

- N. Santos, Contributions in global derivative-free optimization to the development of an integrated toolbox of solvers, NOVA School of Science and Technology, November 2021 PDF

- B. Baptista, Incorporating radial basis functions in global and local direct search, NOVA School of Science and Technology, December 2022 PDF

- A. Mohammadi, Trust-region Methods for Multiobjective Derivative-free Optimization, NOVA School of Science and Technology, February 2024 PDF

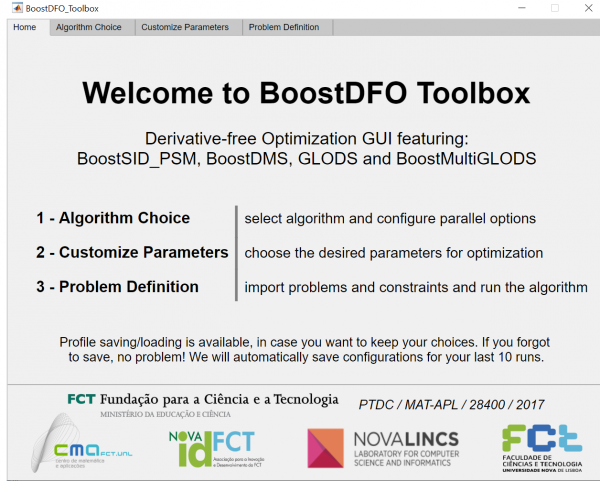

Computational toolbox and codes

The BoostDFO toolbox comprises solvers for global/local, single/multi objective Derivative-free Optimization, allowing a non-expert user to take advantage of a suite of robust and efficient solvers without the burden of mastering all the algorithmic options. Parallel implementations are already available for some of the solvers.

Solvers included: BoostDMS, BoostSID_PSM, BoostGLODS, and BoostMultiGLODS

Version 0.2, December 2020 (written in Matlab; request by sending an e-mail)

BoostDMS is a multiobjective optimization solver which does not use any derivatives of the objective function components. The algorithm defines a search step for Direct Multisearch (DMS) based on the minimization of quadratic polynomial models, built for the different components of the objective function.

Problem class: derivative-free multiobjective optimization problems with (or without) any type of constraints.

Version 0.3, December 2020 (written in Matlab; includes parallelization of function evaluations, with the possibility of simultaneously selecting more than one poll center; request by sending an e-mail)
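Direct multisearch methods compare points through Pareto dominance rather than a single objective value, and keep only the nondominated ones as candidates. The following Python sketch illustrates that comparison for a minimization problem. It is not taken from the BoostDMS code (which is written in Matlab); the function names `dominates` and `nondominated` are hypothetical and chosen only for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def dominates(fa: Sequence[float], fb: Sequence[float]) -> bool:
    """Return True if objective vector fa Pareto-dominates fb (minimization)."""
    no_worse = all(a <= b for a, b in zip(fa, fb))
    strictly_better = any(a < b for a, b in zip(fa, fb))
    return no_worse and strictly_better


def nondominated(values: List[Point]) -> List[Point]:
    """Keep the objective vectors that no other vector in the list dominates."""
    return [v for v in values if not any(dominates(w, v) for w in values)]


if __name__ == "__main__":
    # Hypothetical objective vectors (f1, f2) of four evaluated points.
    candidates = [(1.0, 4.0), (2.0, 2.0), (3.0, 3.0), (4.0, 1.0)]
    print(nondominated(candidates))  # [(1.0, 4.0), (2.0, 2.0), (4.0, 1.0)]
```

Here `(3.0, 3.0)` is discarded because `(2.0, 2.0)` is better in both objectives; the remaining three vectors are mutually incomparable and together approximate the Pareto front.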

BoostSID_PSM is a single objective optimization solver which does not use any derivatives of the objective function. The algorithm defines a search step based on the minimization of quadratic polynomial models and uses an efficient ordering of the poll directions.

Problem class: derivative-free single objective optimization problems with (or without) any type of constraints

Version 0.2, September 2020 (written in Matlab; includes parallelization of function evaluations; request by sending an e-mail)
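To make the idea of a poll step concrete, the following Python sketch shows a basic directional direct-search loop (coordinate search) for an unconstrained problem. It is a simplified illustration, not the BoostSID_PSM algorithm: it has no model-based search step, and the rule that moves the last successful direction to the front of the poll order is an assumed stand-in for the solver's actual ordering strategy. The name `coordinate_search` and all parameter values are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple


def coordinate_search(
    f: Callable[[List[float]], float],
    x0: Sequence[float],
    step: float = 1.0,
    tol: float = 1e-6,
    max_evals: int = 10_000,
) -> Tuple[List[float], float]:
    """Minimize f by polling along +/- coordinate directions, using only function values."""
    x = list(x0)
    fx = f(x)
    evals = 1
    # Poll set: the positive and negative coordinate directions.
    directions = [(i, s) for i in range(len(x)) for s in (1.0, -1.0)]
    while step > tol and evals < max_evals:
        improved = False
        for k, (i, s) in enumerate(directions):
            trial = x[:]
            trial[i] += s * step
            ft = f(trial)
            evals += 1
            if ft < fx:
                # Opportunistic polling: accept the first improving point.
                x, fx = trial, ft
                # Assumed heuristic: try the successful direction first next time.
                directions.insert(0, directions.pop(k))
                improved = True
                break
        if not improved:
            # Unsuccessful poll: shrink the step size.
            step *= 0.5
    return x, fx


if __name__ == "__main__":
    # Hypothetical test function with minimizer (1, -2).
    sphere = lambda v: (v[0] - 1.0) ** 2 + (v[1] + 2.0) ** 2
    print(coordinate_search(sphere, [0.0, 0.0]))  # ([1.0, -2.0], 0.0)
```

Because the polls in one iteration are independent function evaluations, they are the natural place to parallelize when each evaluation is expensive.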

BoostGLODS is a single objective global optimization solver which does not use any derivatives of the objective function. The algorithm uses a clever multistart strategy, where new searches are initialized but not always conducted until the end. Local optimization is based on directional direct search. Global optimization is based on Radial Basis Functions.

Problem class: global single objective derivative-free optimization problems with bound constraints

Version 0.2, December 2022 (written in Matlab; includes parallelization of function evaluations; request by sending an e-mail)
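One way to picture a multistart strategy in which searches are "not always conducted until the end" is to run several local direct searches in rounds and drop a search once it comes close to a better one. The Python sketch below does this for a small example. It is only an illustration under stated assumptions: the merging rule, the `merge_radius` parameter, and all function names are hypothetical, no Radial Basis Function model is used, and none of this is taken from the BoostGLODS code.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple


def poll(f: Callable[[List[float]], float], s: Dict) -> None:
    """One coordinate-search poll for search s; halve its step if nothing improves."""
    for i in range(len(s["x"])):
        for sign in (1.0, -1.0):
            trial = s["x"][:]
            trial[i] += sign * s["step"]
            ft = f(trial)
            if ft < s["fx"]:
                s["x"], s["fx"] = trial, ft
                return
    s["step"] *= 0.5


def merge(searches: List[Dict], radius: float) -> List[Dict]:
    """Keep a search only if no better search lies within the given radius."""
    kept: List[Dict] = []
    for s in sorted(searches, key=lambda s: s["fx"]):
        if all(math.dist(s["x"], k["x"]) > radius for k in kept):
            kept.append(s)
    return kept


def multistart_with_merging(
    f: Callable[[List[float]], float],
    starts: Sequence[Sequence[float]],
    step: float = 0.5,
    tol: float = 1e-4,
    merge_radius: float = 0.25,
    max_rounds: int = 1000,
) -> List[Tuple[float, List[float]]]:
    """Run local searches in rounds, merging close ones; return (value, point) pairs, best first."""
    searches = [{"x": list(s), "fx": f(list(s)), "step": step} for s in starts]
    for _ in range(max_rounds):
        if all(s["step"] <= tol for s in searches):
            break
        for s in searches:
            if s["step"] > tol:
                poll(f, s)
        searches = merge(searches, merge_radius)
    return sorted((s["fx"], s["x"]) for s in searches)


if __name__ == "__main__":
    # Hypothetical 1-D function with a global minimum near -1.02 and a local one near 0.97.
    double_well = lambda v: (v[0] ** 2 - 1.0) ** 2 + 0.2 * v[0]
    for value, point in multistart_with_merging(double_well, [[-2.0], [-1.5], [0.5], [2.0]]):
        print(point, value)
```

In this example four searches are started but only two survive, one per local minimizer, so function evaluations are not spent on converging several times to the same point.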

BoostMultiGLODS is a global multiobjective optimization solver which does not use any derivatives of the objective function components. The algorithm uses a clever multistart strategy, where new searches are initialized but not always conducted until the end. Local optimization is based on direct multisearch.

Problem class: global multiobjective derivative-free optimization problems with bound constraints

Version 0.1, September 2020 (written in Matlab; includes parallelization of function evaluations and new strategies for the selection of the poll center; request by sending an e-mail)